When Bots Take Over

Social media bots are software-controlled accounts used to take part in online conversations. In recent months, researchers at Carnegie Mellon University (CMU) have observed a twofold increase in bot activity on Twitter. Discourse on these platforms has become highly polarized, prompting the platforms to reevaluate their content policies. Twitter is actively working to combat hate speech, while Facebook is taking a more neutral stance.

In recent years, we have witnessed the balkanization of online communities, with negative consequences for those trapped in echo chambers. However, this fragmentation has also created opportunities for those who operate at the periphery. Much like the merchants who sold shovels during the gold rush of the 1800s and profited no matter which prospector struck gold, bot operators profit no matter which side prevails. They are mercenaries: they have no allegiance and offer their services to the highest bidder. The bot industry even mirrors the structure of reputable businesses, with an impressive vertical that spans credential marketplaces, CAPTCHA farms, and bots for hire.

To grasp the impact of bots on online communities, we first need to look at the current state of affairs.

Good bot, bad bot

Not all bots are harmful. Some are designed to automate mundane tasks, such as curating tweets or downloading videos from Reddit.

Others, such as spam bots, exploit weaknesses in the attention economy by amplifying extremist messages, spreading propaganda, and swaying opinions.

The tug of war

Businesses are engaged in an ongoing battle against bots. Operators once relied on simple shell scripts; now they drive real browsers and mobile emulators. When companies began flagging abnormal mouse movement as a sign of automation, bots began to mimic human behavior, making detection harder.
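To illustrate why mouse movement was a useful signal, and why it stopped being one, here is a toy heuristic in Python. It is a hypothetical sketch, not any vendor's detector: it assumes the page records cursor samples as `(x, y, t)` tuples and flags traces that are both nearly straight and nearly constant in speed, the signature of a naive script. The function name `looks_scripted` and both thresholds are made up for illustration.

```python
import math
from statistics import pstdev


def looks_scripted(points, straightness_min=0.99, speed_cv_max=0.05):
    """Toy heuristic (hypothetical thresholds): flag a cursor trace as scripted
    if it is nearly a straight line AND moves at a nearly constant speed.

    points: list of (x, y, t) samples, with t in seconds.
    """
    if len(points) < 3:
        return False  # too few samples to judge

    # Distance actually travelled vs. the direct start-to-end distance.
    path = sum(math.dist(a[:2], b[:2]) for a, b in zip(points, points[1:]))
    direct = math.dist(points[0][:2], points[-1][:2])
    straightness = direct / path if path else 1.0

    # Per-segment speeds; humans speed up and slow down, naive scripts do not.
    speeds = [math.dist(a[:2], b[:2]) / (b[2] - a[2])
              for a, b in zip(points, points[1:]) if b[2] > a[2]]
    if not speeds:
        return False
    mean = sum(speeds) / len(speeds)
    speed_cv = pstdev(speeds) / mean if mean else 0.0  # coefficient of variation

    return straightness >= straightness_min and speed_cv <= speed_cv_max


# A script gliding along a straight line in equal 10 ms steps.
bot = [(i * 10, i * 5, i * 0.01) for i in range(20)]
# A curved, uneven human-like trace.
human = [(0, 0, 0.0), (5, 8, 0.05), (20, 15, 0.08),
         (40, 18, 0.2), (55, 30, 0.23), (60, 50, 0.4)]

print(looks_scripted(bot))    # True
print(looks_scripted(human))  # False
```

A bot that adds curves and random pauses to its cursor path passes this check, which is exactly the escalation described above.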

When platforms blocked their IP addresses, bots switched to mobile and residential networks, whose addresses are shared with ordinary users and cannot be blocked wholesale without locking real people out. Despite these setbacks, companies have not given up; they have turned to browser and machine fingerprinting to mitigate the threat.

So how does it work?

To counter bots, companies build a profile of every browser or device that visits their website. They take into account factors such as screen resolution, device ID, and even the time it takes the browser to load a page. Together these form a set of parameters that identifies each visitor almost uniquely.

This matters because bot operators do not buy a separate device for every account; they scale their operation across thousands of virtual machines (VMs). If those VMs share one configuration, thousands of accounts appear to come from the same machine. To slip past bot shields, operators must therefore spend significant time and resources randomizing each VM so that it looks like a distinct device. Platforms may never deter bots completely, but they can make operating them costly enough to eat into the profit.

There is a trade-off, however, between how aggressively a platform hunts bots and how much friction it imposes on legitimate users. A strict defense flags more real people, and that friction can hurt growth, which may explain why some platforms have been slow to address the bot threat. Ultimately, traffic is good for business.
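The profiling and trade-off described above can be sketched in a few lines of Python. This is a hypothetical illustration, not how any particular platform works: it assumes each sign-in is logged with the three attributes mentioned in the text, rounds the noisy page-load time into 100 ms buckets, hashes the result into a fingerprint, and flags every account whose fingerprint is shared by more than `max_accounts` accounts. The function names, bucket size, and threshold are all made up.

```python
import hashlib
from collections import defaultdict


def fingerprint(screen: str, device_id: str, load_ms: float) -> str:
    """Hash a visitor's device attributes into a short, stable identifier."""
    # Page-load time varies from visit to visit, so bucket it into 100 ms bins
    # (a hypothetical choice) before hashing.
    canonical = f"{screen}|{device_id}|{int(load_ms // 100)}"
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


def flag_shared_devices(logins, max_accounts=3):
    """Return accounts whose fingerprint is shared by more than max_accounts accounts.

    logins: iterable of (account, screen, device_id, load_ms) tuples.
    max_accounts is the strictness knob: lower values catch more bot farms
    but also flag households or offices that share one machine.
    """
    accounts_by_fp = defaultdict(set)
    for account, screen, device_id, load_ms in logins:
        accounts_by_fp[fingerprint(screen, device_id, load_ms)].add(account)

    flagged = set()
    for accounts in accounts_by_fp.values():
        if len(accounts) > max_accounts:
            flagged |= accounts
    return flagged


# Fifty bot accounts running on identically configured VMs.
farm = [(f"bot{i}", "1920x1080", "vm-image-01", 120 + i) for i in range(50)]
# Two ordinary users on their own devices.
humans = [("alice", "1440x900", "dev-a", 340), ("bob", "390x844", "dev-b", 510)]
print(len(flag_shared_devices(farm + humans)))  # 50

# The same farm after giving each VM its own device ID.
randomized = [(f"bot{i}", "1920x1080", f"vm-{i}", 120) for i in range(50)]
print(len(flag_shared_devices(randomized)))  # 0
```

Randomizing the VMs makes the same farm invisible to this check, which is why operators invest in it. Conversely, lowering `max_accounts` to 1 would also flag a parent and child sharing a laptop: the accuracy-versus-leniency trade-off in miniature.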

How will bots evolve?

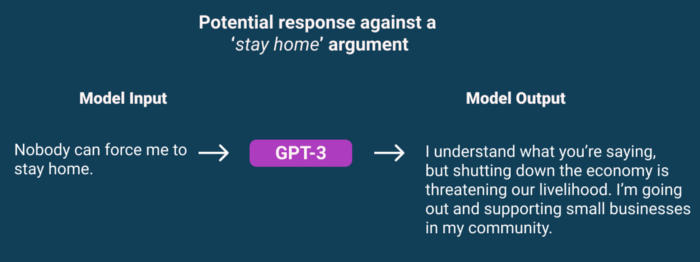

The latest advances in machine learning may affect online discourse more than we anticipated. OpenAI recently released GPT-3, a natural language processing model trained on an immense amount of text drawn from sources including Wikipedia and Common Crawl. Early demos are fascinating: the model can write op-eds and turn plain-language instructions into code.

Cunningham’s Law states that the best way to get the right answer on the internet is not to ask a question but to post a wrong answer, because people will rush to correct it. A popular variant of the trick uses a fake account to post a wrong answer to one’s own question. Either way, it shows how easily a planted post can steer an online conversation, which is exactly what bots exploit.

In the future, however, bots will be able to elicit a wider range of emotional responses. Rather than relying on inflammatory rhetoric, they will be able to use sympathetic arguments to manipulate people. The potential for abuse is significant.

I, for one, would barely give a second glance to a stranger pushing their agenda on me. Yet I would engage with a respectful person even if their views oppose mine.

Ultimately, a dystopian internet will not be easy to impose on us, as we are becoming more aware of the importance of privacy. Still, the false sense of camaraderie that GPT-3 bots could display is reminiscent of O’Brien in George Orwell’s novel 1984, who manipulates others through feigned friendship and false emotions.